DE{CODE}: Why the Edge Isn’t an Edge Case

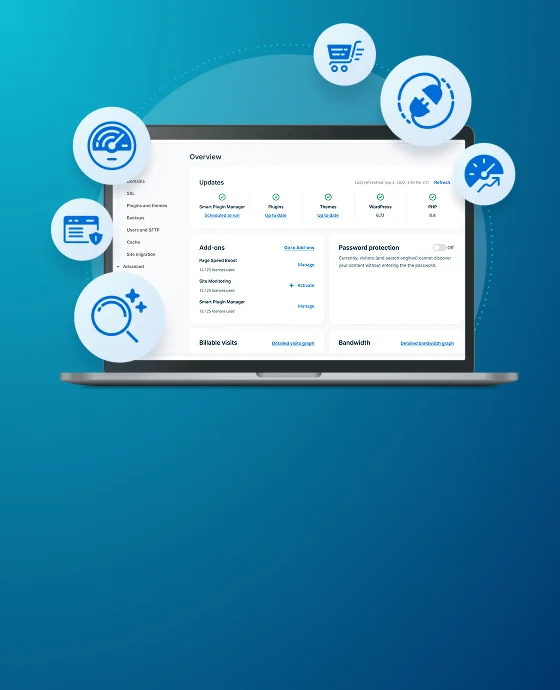

When you’re at the edge, speed, security, and server health cannot be an afterthought.

In this session, Cloudflare VP of Product Sergi Isasi and WP Engine Product Manager Pavan Tirupati discuss why having an edge-first mentality is essential to the success of each website you build or maintain.

Session Slides

Full Text Transcript

PAVAN TIRUPATI: Hey, everyone. Thank you for joining us in this session about how the edge isn’t really an edge case. I’m Pavan Tirupati, Product Manager at WP Engine on the Outreach team, and I’m primarily responsible for the security, performance, and reliability of the edge to grow and empower WP Engine customers.

And joining us today from Cloudflare is Sergi, VP of Product Management. Sergi, would you like to introduce yourself?

SERGI ISASI: Sure. Thanks for having me, Pavan. And thank you, everyone, for joining our session. As Pavan said, I’m VP of Product for Application Performance at Cloudflare, focused on the performance and reliability of our edge. We want to make things fast and reliable for all of our customers. The products I cover are how Cloudflare receives and processes traffic at the edge, at both layer 4 and layer 7. That includes our cache, our proxy (FL), Spectrum, technology fundamental to Cloudflare like our DNS systems, our certificate management systems, and our IP address management systems, and also the newer edge applications: load balancing, our brand new Waiting Room product, and our upcoming Web3 products.

I’ve been at Cloudflare for about 4 and 1/2 years. And again, happy to be here today.

PAVAN TIRUPATI: Awesome. Today, we have a wonderful session for you guys as we dig deeper into what exactly the edge is, how it is useful, and, as the title says, why the edge isn’t an edge case anymore. On the agenda, we’ll dig into what the edge is and what its benefits are. And given these times, it’s critical to focus more on security.

We’re going to talk about some examples and some security threats. We’ll also look at how the edge can benefit the audience here and everyone who has a digital presence in the world. And we’ll look at some specific examples that might help people who are going through some of these security threats and issues.

So it’s going to be exciting, resourceful, and insightful. Let’s begin by setting some context. I want to establish a baseline of what the edge is. And I think it is not a surprise to anyone when I say companies are experiencing a shift to a builder culture, one that is built on developers’ ability to directly create and control digital experiences.

As sites and applications move from monolithic builds to more of a microservices architecture, the ability to deliver content from diverse sources becomes increasingly important. And we know and understand the edge to be the part of the internet that’s actually closest to our end users, sometimes also referred to as the last mile. But before I get into the specifics, Sergi, I want to level-set with the audience: what is the edge, and why is it even critical?

SERGI ISASI: Sure. So there’s a longtime saying in cloud computing, which is “cloud is just someone else’s computer.” I really like this saying. It means that it’s the same thing that you would have on your desktop or laptop, but someone else is managing it. And the edge is that exact same thing, just closer to the user.

Why is that important? At Cloudflare, we want things to be as close to the user as possible. And it really comes down to what you said, which is the last mile. No matter how fast or efficient you make your software, even if it can respond in under a millisecond, you’re still beholden to the speed of light. If your software is not on the user’s device or as close to the user as possible, the user is going to experience that little bit of latency. Sometimes that latency is OK, and sometimes it’s very, very jarring to the end user. So the point is to decide what makes sense to run close to the end user, on the edge, and what makes sense to keep close to the core.
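A rough worked example of the speed-of-light point. It assumes light in optical fiber travels at about 200,000 km/s (roughly two-thirds of its speed in a vacuum) and that paths are straight lines, which real routes never are; the distances are illustrative, not Cloudflare measurements.

```python
# Rough lower bound on network round-trip time imposed by the speed of light.
# Assumption: light in optical fiber covers about 200,000 km/s (roughly 2/3 of c),
# i.e. about 200 km per millisecond. Real routes are longer than straight lines.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Minimum round-trip time in ms for a straight fiber path of distance_km."""
    one_way_ms = distance_km / FIBER_KM_PER_MS
    return 2 * one_way_ms


if __name__ == "__main__":
    # Illustrative distances only, not measured routes.
    for label, km in [("same metro", 50), ("cross-continent", 4000), ("intercontinental", 9000)]:
        print(f"{label}: at least {min_rtt_ms(km):.1f} ms round trip")
```

Even with zero processing time, the 9,000 km case costs at least 90 ms per round trip, and a new HTTPS connection needs multiple round trips (TCP and TLS handshakes) before the first byte arrives. Serving from a nearby edge location shrinks the distance term, which no amount of server-side optimization can.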

And what Cloudflare does is try to put everything on the edge. I think one of the reasons you asked me to do this chat is because we run arguably one of the largest edge networks in the world, and we’re obviously incredibly proud of it. Cloudflare is a bit over 10 years old, and we’ve been building this network that entire time. It’s grown to be in 250 cities in 100 different countries, with the goal (and we’ve actually achieved it) of being within 50 milliseconds of 95% of internet users across the world. And that, again, is the last mile: if we can be within 50 milliseconds, we can be that much faster for each one of those end users.

The other part of it is connecting to other networks. We connect to 10,000 other networks across the world, lots of local ISPs, for example, and we also operate our own backbone, which makes backhauling that traffic even faster when we do need to go to the core or to origin. We ended 2021 with a little over 100 terabits per second of capacity. That’s important for horizontal scaling, both for increases in traffic for our customers and for increases in attacks on our customers and on our own network.

One of the things that’s been interesting about compute over the last 30 or 40 years is how it has moved back and forth between edge, core, and client, depending on where it made sense and where the compute power was at that point in time. If you think all the way back to before the public internet, you had mainframes: lots of compute power at the core, very little compute power at the edge, and really small amounts of bandwidth to move data back and forth. You were sending commands to the mainframe, and it would send you back the results of those commands in text.

We transitioned from there into lots of advancements on the endpoint, so you got lots of fat clients, like Windows and Microsoft Word, where you now did a lot of compute at the endpoint and then sent the results back out, typically to the core, to share that content.

As the edge and the core got stronger, you started to see cloud apps. Rather than make a change on your device, you made the change in a web browser, and that was propagated to other devices for sharing. This became really important with mobile devices, especially early mobile devices, which had less compute but a lot more bandwidth.

So why is this critical? It’s really all about user expectations of speed. So the user always wants a good user experience. And especially today, the idea of a good user experience is kind of instant interaction. I click on a link, I press a button, something happens, and I don’t really care where it happened. I might not even know where it happened, but I want it to be fast.

The other thing that’s changed is the environment we find ourselves in. There are significantly more attacks, largely because those devices have become more powerful. And we see a lot of changing regulations as security and privacy become top of mind not only for users but also for governments. This is why Cloudflare keeps adding POPs. We see more users, we see more traffic, we see more attacks, and we see more use cases that we could put on the edge and make powerful for those end users.

PAVAN TIRUPATI: Awesome. Can we dig into POPs a little bit? What is a POP? What has changed in POPs over time? And specifically, what is unique about Cloudflare’s implementation of POPs?

SERGI ISASI: So thanks for bringing that back. I say POPs a lot, and I should specify what it means. It’s an internet Point of Presence. And in Cloudflare’s case and in most other cases, when you hear somebody talk about a POP, what it means is a stack of servers, sitting somewhere, that runs software.

As far as what’s changed over time, it’s actually easier to talk about what has changed versus what hasn’t. And we’ll go into that a bit. So we’re on our 11th generation of servers. We write about each one of those iterations on our blog. So we keep getting faster computers at the edge, which is great. It means lower costs, it means more capabilities, it means just generally better things for end users.

One of the interesting things that has changed over time is that we’ve implemented on three different CPU architectures, or actually two different CPU architectures from three manufacturers. We run both Intel and AMD, and we also run ARM on our edge.

The other thing that has changed over time is we just keep adding locations. It’s not clear to me how many we had when we launched 10-plus years ago. It was in the dozen range. But there’s a funny story of our CTO who was an early Cloudflare fan, knew our founders, but he refused to join Cloudflare until he got a POP close to where he was in Europe. He said, when is this coming up? And then I’ll join.

Our locations grew first based on demand. If you see a lot of traffic in a region, it’s generally less expensive to put hardware in that region and serve traffic there. So we started doing that at first.

Once we got big, we started seeing local partners or ISPs start asking us to build hardware in the region to make things more efficient for them and their end users. So that was an interesting kind of sea change in Cloudflare’s world.

Our original goal was to be within 100 milliseconds of end users. And then we realized we could do better. So now we have the 50 millisecond goal. And I wouldn’t be surprised if you see that go even smaller as the years progress.

What hasn’t changed is that we, very early on, made a unique-to-us and pretty fateful choice, which is that we would run the same software on every edge server at every location. This ended up being an easier choice for most of our engineering teams. We know what’s running on each device and you can kind of troubleshoot and run things a little bit more efficiently there. Some of our engineering teams have a lot more work because of this as well.

It does make things a lot easier to scale, both long term and short term. In the short term, it allows us to move resources to different services as necessary depending on load and what’s happening at that location at that point in time. We can horizontally scale across every machine.

In the long term, it allows us to proactively decide where new machines need to go, because we know we need to run the entire stack. The other big advantage, for our engineering teams and specifically our product engineering teams, is that we have consistent performance across services. We’re not worried about some location being closer to certain types of users and therefore being faster and giving a different experience. It’s going to be consistent across servers and across the world.

And one of the big changes we’ve had, probably three years old at this point, is that we now allow our customers to run their code on our edge through our Workers product. The nice advantage there is that when a customer deploys their code, they don’t have to select a region of the world. We don’t force them to say, I want to run in US West or what have you. Their software is deployed to all locations and runs as close to their eyeballs as possible.

PAVAN TIRUPATI: Great. So how does the edge compare to core?

SERGI ISASI: Sure. So it kind of depends on your architecture. And for some architectures, the edge is the core and the core is the edge. If you have just one place, you’re kind of doing everything at once.

Generally, though, the edge is going to be faster and more efficient for compute and the core is where you keep secrets and configuration and you push data from the core to the edge.

PAVAN TIRUPATI: And does Cloudflare have a core? And if it does, how is it implemented?

SERGI ISASI: From day one, yeah. And we don’t talk about it a lot. It’s kind of interesting. But if you think about it, we were founded in 2009, and so running everything at the edge was incredibly impractical in 2009 and, for some things, impractical now.

So what do we run at the core? Configuration management. We have to push out software, and we have to do it from somewhere, so we still push the Cloudflare software, all of our new versions, every day, from our core to our edge. We also run customer configuration, which still talks to our core data centers and goes from there out to the edge. And there’s actually an interesting story here involving WP Engine and our DNS software.

In the early days, Cloudflare ran PowerDNS, open-source DNS software. In 2013, we started building our own DNS software, which we internally call RRDNS. It’s a very efficient bit of software. We had some zones with hundreds of thousands of records, and everything was moving along relatively well with those requirements. Then WP Engine came along and said they had upwards of possibly a million records in their zone. And update speed, the ability to make a change and push it out to our edge, was incredibly critical, because every change meant a customer was being onboarded and needed to have that experience. This was an actual edge case for us. So we looked at that and said, OK, we obviously need to rework how we manage our core and send that data out to the edge, to handle both the size of that content and the speed and frequency at which you update it.

So in 2016, one of our DNS engineers, Tom Arnfeld, asked if he could sit down with WP Engine to understand what you wanted and why you wanted it, what it would look like in 2017, and what it would look like in 2022, now that we’re five years into this. What we did in 2017 was rewrite all of the data structures for our DNS software so that, at the request of our CEO, it would move data to the edge like magic. It was one of those things where we had a customer with a need, we wanted to meet that need, and we had to rethink how we move data from the core to the edge.
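The talk doesn’t describe how the rewritten data structures work, so the following is only a hypothetical sketch of the general problem: shipping small versioned changes from the core to the edge instead of re-sending a zone with around a million records on every update. The class and method names are invented for illustration and are not RRDNS internals.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical model of core-to-edge propagation: NOT Cloudflare's RRDNS design.
# The core keeps a versioned change log so edges can fetch only what changed
# instead of re-downloading a very large zone on every update.


@dataclass
class CoreZoneStore:
    records: dict[str, str] = field(default_factory=dict)
    # Each entry is (version, record name, new value or None for a deletion).
    log: list[tuple[int, str, Optional[str]]] = field(default_factory=list)
    version: int = 0

    def upsert(self, name: str, value: str) -> None:
        self.version += 1
        self.records[name] = value
        self.log.append((self.version, name, value))

    def delete(self, name: str) -> None:
        self.version += 1
        self.records.pop(name, None)
        self.log.append((self.version, name, None))

    def changes_since(self, version: int) -> list[tuple[int, str, Optional[str]]]:
        # Versions only grow, so a linear scan is enough for a sketch;
        # a real system would index or compact this log.
        return [entry for entry in self.log if entry[0] > version]


@dataclass
class EdgeReplica:
    records: dict[str, str] = field(default_factory=dict)
    version: int = 0  # last core version this edge has applied

    def sync(self, core: CoreZoneStore) -> int:
        """Apply only the changes this edge has not seen; return how many."""
        changes = core.changes_since(self.version)
        for version, name, value in changes:
            if value is None:
                self.records.pop(name, None)
            else:
                self.records[name] = value
            self.version = version
        return len(changes)
```

For example, after 1,000 `upsert` calls and an initial `sync`, onboarding one more customer with a single `upsert` makes the next `sync` apply exactly one change. A production system would also need log compaction and a full-snapshot fallback for edges that fall too far behind.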

Another thing that we still do at the core is analytics. So telemetry comes from the edge into core. Our customers, when they view their analytics, they go to a dashboard or an API, and that’s all being served from the core.

Over time, customer size and increased attack sophistication made us rethink how we did telemetry. We used to run, for example, all of our DDoS detection software at the core. Telemetry would come in from the edge, the core would say, that looks like a DDoS, and it would send data back out to the edge to mitigate. That is sufficient for some DDoS attacks, but for others, we need to make that decision at the edge. So in the middle of last year, we augmented our original Gatebot system, which runs at the core, with a couple of new systems that run at the edge, make decisions independently of the core, and then report back, continually adapting to the attack surface.
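Gatebot’s internals aren’t described here. As a hypothetical illustration of the pattern of deciding at the edge and reporting back to the core, this sketch applies a per-source sliding-window request limit locally; the class, thresholds, and report format are assumptions, and real DDoS detection uses far richer signals than request counts.

```python
from collections import defaultdict, deque


class EdgeRateDetector:
    """Hypothetical edge-local detector: mitigates on its own, then reports to core."""

    def __init__(self, max_requests: int, window_s: float):
        self.max_requests = max_requests
        self.window_s = window_s
        self.hits: dict[str, deque] = defaultdict(deque)  # source -> recent timestamps
        self.blocked: set[str] = set()
        # Reports that would be shipped to the core asynchronously for global analysis.
        self.reports: list[tuple[str, float]] = []

    def allow(self, source: str, now: float) -> bool:
        if source in self.blocked:
            return False  # a real system would expire blocks after some time
        window = self.hits[source]
        window.append(now)
        # Drop timestamps that have fallen out of the sliding window.
        while window and window[0] <= now - self.window_s:
            window.popleft()
        if len(window) > self.max_requests:
            # Mitigate immediately at the edge: no round trip to the core needed.
            self.blocked.add(source)
            self.reports.append((source, now))
            return False
        return True
```

The decision in `allow` needs no round trip to the core, while `reports` still gives the core the telemetry it needs to see patterns across locations.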

The last thing I’ll talk about at the core is that we do most of our machine learning there today. We lean heavily on machine learning, specifically for security products. But we want to do more of that at the edge, because we’ll likely see a similar pattern to the DDoS system. So we partnered with NVIDIA to start running more of our ML at the edge as well.

PAVAN TIRUPATI: Sergi, you mentioned DDoS and security. I want to dig into that a little bit, specifically because security is highly critical. What are some of the trends and things you are seeing?

SERGI ISASI: Sure. It’s a bit of a broken record from us, but DDoS attacks are record-breaking, and we break that record year over year. The reason is that botnets are growing in size and leveraging more powerful devices. If you think about how much faster your cell phone or your computer is now versus the year prior, it only makes sense that attackers keep getting more capacity. That means larger attacks with significant throughput (we fought off a 2 terabit per second attack a little while ago, the second largest we’ve heard of), and also smarter attacks that don’t need a lot of throughput but send a lot of requests, and expensive requests.

Really what we’re talking about here is more sophistication from attacks. And the stat that I think is most interesting, something we just talked about, is that 8% of traffic on our edge is mitigated. Before we apply any sort of rules or anything like that, 8% is just dropped altogether, which means that a customer who’s thinking about doing security at the edge can quickly get rid of a lot of transactions and interactions with their application that they flat out don’t want or need because they’re some sort of attack.

PAVAN TIRUPATI: Yeah, and at WP Engine, we’re working to make Advanced Network, one of our network offerings, the default for all of our customers so they can leverage this additional layer of security. We are also witnessing never-before-seen growth in our security offering, GES, which is geared toward customers who are looking for additional levels and layers of security. GES comes with a web application firewall and Argo Smart Routing.

But one thing I want to highlight here is that 65% of WP Engine customers currently are not on either of these networks. Argo Smart Routing and the WAF are something they could definitely benefit from. Would you mind expanding a little on how Argo Smart Routing and the WAF work from a Cloudflare perspective?

SERGI ISASI: Sure. Argo is a very interesting product. It’s unique to Cloudflare, and it’s a little mind-bending if you aren’t familiar with it. Argo takes the edge telemetry I was talking about and looks for better routes across the internet. Internally, we say it’s like Waze for the internet, which I guess kind of works. It’s not my favorite analogy, but it’s a reasonable one.

Routes are sometimes inefficient, and not consistently so. Today, it may be faster to go direct to origin, and sometimes it’s not. Sometimes it makes more sense for us to go from one Cloudflare edge location to another to circumvent internet congestion.

The big point of Argo is that it reduces latency, both from the user to the edge and from the edge to the origin (because you’re probably not serving all of your content from the edge today), by 40%. That’s a massive improvement from basically pressing a button, without requiring any sort of code change to the application.
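The talk doesn’t say how Argo chooses routes internally. As a loosely analogous illustration of routing on measured latency rather than on the default path, this sketch runs a textbook Dijkstra shortest-path search over a hypothetical graph of edge-to-edge and edge-to-origin latencies; the node names and numbers are made up.

```python
import heapq


def fastest_route(latency_ms: dict[str, dict[str, float]], src: str, dst: str) -> tuple[float, list[str]]:
    """Dijkstra's shortest path over measured link latencies (in ms)."""
    best = {src: 0.0}
    prev: dict[str, str] = {}
    queue = [(0.0, src)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == dst:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry; a cheaper path was already found
        for neighbor, link in latency_ms.get(node, {}).items():
            candidate = cost + link
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(queue, (candidate, neighbor))
    if dst not in best:
        raise ValueError(f"no route from {src} to {dst}")
    # Walk predecessors back from the destination to rebuild the path.
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return best[dst], path[::-1]


if __name__ == "__main__":
    # Hypothetical latencies: the direct edge-a -> origin path is congested.
    links = {"edge-a": {"origin": 120.0, "edge-b": 30.0}, "edge-b": {"origin": 40.0}}
    print(fastest_route(links, "edge-a", "origin"))
```

With a congested 120 ms direct path from `edge-a` to `origin`, the search returns the 70 ms detour through `edge-b`; if the direct link drops to 50 ms, it goes direct again. Re-running the search as telemetry changes is what lets the chosen route follow congestion.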

PAVAN TIRUPATI: That’s actually quite insightful. Thanks, Sergi. What changes have you seen for your customer base? What is the practical impact of the increase in attacks and the actual surface of the attacks?

SERGI ISASI: I think the big shift in 2020 and into 2021 is that we started to see the rise of ransomware attacks, and a different kind of ransomware: not one that took over the endpoint and encrypted it, but one that said, we are going to attack you and take you down if you do not pay us.

In 2020, we saw quite a few of those. In 2021, we saw an increase but a change in pattern. Rather than generically finding a target, attackers would go after targets in the same industry. The interesting one is that we saw a lot of voice over IP and teleconference companies get targeted. That kind of makes sense, right? As everyone was working remotely more, these services were critical, and it was important for both the users and the providers to stay online, so attackers had a very obvious target there.

One thing that still holds true is that shared intelligence is important. While we were seeing each of these customers get targeted, we were seeing the same attack patterns going to those applications, which makes it easier for someone like us, who sees all that traffic, to block them.

PAVAN TIRUPATI: Yeah, predictability and patterns are actually useful for understanding the data, so I get that. But how and where should the devs on this call be thinking about protection in general? What is the worst-case scenario you’ve seen in the past that you can share here?

SERGI ISASI: Sure. So a worst-case scenario is a focused attack. So if someone really wants to take you offline, it is extremely difficult to handle that kind of motivated attacker. So it’s something to consider if you’re running an application that is in some way controversial or can have some sort of enemy. And that’s lots of things these days.

The attack that I have here is an example of a DDoS that reached 17.2 million requests per second. This isn’t throughput; these were actual HTTP requests. They were not amplified or spoofed. This attacker had access to enough devices that could make these connections and make them look legitimate, or actually, they were legitimate connections. It was an extremely distributed attack. It did have some concentration in some regions, but it was seen in the vast majority of our locations.

What made it a worst-case scenario is that the mitigation was expensive. It was done at layer 7. We had to accept the connection and terminate SSL, which is a number of handshakes going back and forth, before we could identify the attack versus legitimate traffic and fend it off. If you’re trying to run this on an on-premises WAF or something like that, it’s going to be very, very expensive just to find the traffic, much less mitigate it.

PAVAN TIRUPATI: Great. Thank you, Sergi. Sticking with security: during times of war, like we are witnessing with Russia and Ukraine right now, there is an expected upsurge in cyber attacks. In fact, CISA and the FBI have issued a joint advisory about the destructive nature of these attacks and how vulnerable critical assets and data can be during these times. They recommend that all organizations, regardless of size, adopt a heightened security posture. And at WP Engine, we are seeing this uptrend in attacks as well.

What’s your take on readiness for events like this? How can we prepare for such situations? Another big event that comes to mind, besides the Russia-Ukraine war, is the Log4Shell event we witnessed last year, which impacted a huge number of applications around the world.

SERGI ISASI: Yeah, I mean, we have to respond. That’s the world that we’re in. Things happen, and really, really terrible things happen, and we have to react to them. As far as Ukraine goes, we can’t share a ton of information, but one of the things that we can share is, while traffic in Ukraine has remained relatively consistent from an overall user perspective, we have seen firewall mitigations go up considerably.

It’s gone from the typical 8% we talked about earlier to as high as 30% of total requests. That means there’s just more attack traffic mixed in with regular user traffic. And again, just like the previous example, these are expensive mitigations that have to be done at layer 7, because it is difficult to distinguish them from regular traffic based on layer 4 alone.

As for Log4Shell, that was probably the biggest event I can remember in a long time. It hit the industry pretty hard. I remember lots of individuals at Cloudflare reading the internal discussion, and I remember just going, oh, oh god, this is massive.

It was a vulnerability in a very common bit of software that allowed the attacker to insert some arbitrary characters, and the presence of those characters would cause the software to go and make a request to a URL that the attacker inserted. So everyone was scrambling. You may not know your dependencies. That’s lesson one: know your dependencies, know what software you’re running, and what software that software is running.

But the big thing was that there were lots of really clever exploits. When we were mitigating this for our customers, and for your customers, we had to keep deploying new variations of our firewall rules, because the malicious content could show up in different places and be encoded in many different ways.
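Why did the rules keep changing? The Log4Shell trigger is a `${jndi:...}` lookup, but Log4j’s own lookup syntax lets an attacker assemble that string indirectly, for example `${${lower:j}ndi:...}` or `${${::-j}${::-n}${::-d}${::-i}:...}`, and wrap it in URL encoding. The hypothetical detector below undoes a few of those tricks before matching. It is a simplified sketch, not Cloudflare’s WAF logic, and it will miss many real variants.

```python
import re
from urllib.parse import unquote

# Matches an innermost Log4j-style lookup: "${...}" with no "$", "{" or "}" inside.
_INNER_LOOKUP = re.compile(r"\$\{([^${}]*)\}")
_JNDI = re.compile(r"\$\{jndi:", re.IGNORECASE)


def _simplify(match: re.Match) -> str:
    """Collapse one innermost lookup to the literal text it would produce."""
    body = match.group(1)
    if ":-" in body:
        # Default-value syntax such as ${::-j} or ${env:UNSET:-j} yields "j".
        return body.split(":-", 1)[1]
    prefix, sep, value = body.partition(":")
    if sep and prefix.lower() in ("lower", "upper"):
        # The final match is case-insensitive, so normalizing to lowercase is enough.
        return value.lower()
    return match.group(0)  # unknown lookup: leave it untouched


def looks_like_log4shell(text: str, max_rounds: int = 10) -> bool:
    # Undo (possibly repeated) percent-encoding, e.g. "%24%7Bjndi:".
    for _ in range(3):
        decoded = unquote(text)
        if decoded == text:
            break
        text = decoded
    # Peel nested lookups from the inside out until ${jndi: appears or nothing changes.
    for _ in range(max_rounds):
        if _JNDI.search(text):
            return True
        reduced = _INNER_LOOKUP.sub(_simplify, text)
        if reduced == text:
            return False
        text = reduced
    return bool(_JNDI.search(text))
```

Each new obfuscation trick needs another normalization step in a detector like this, which is the cat-and-mouse game behind the repeated rule updates.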

One of the most interesting things about Log4j was that we saw it in the logging pipeline. Even if you thought your application was firewalled enough that it wouldn’t receive a connection from the outside world, if it processed a log event that had these characters in it, it would still go and make that request out. So a simple firewall was not enough.

The edge is important and very helpful here because it gives you a quick and easy way to put controls in place, whether or not you’re sure you’re vulnerable. There’s no downside to putting the controls in place, which is another reason we rolled it out even to our free customers. So the single control point is super helpful in that scenario.

PAVAN TIRUPATI: And what are some of the tools or techniques that are available for customers to scale traffic in this scenario?

SERGI ISASI: Sure. For any scenario, we have Workers on Cloudflare. Workers lets you run your code on our edge, so you can build whatever you want and not worry about scaling horizontally.

We also introduced a product in early 2021 called Waiting Room. A waiting room is something you’re likely familiar with: you went to go buy something, and you were put into a queue to decide whether there was enough of that thing to buy. It can also work for an application. Can you connect to the site and have a good experience, or should you wait?

This is a really interesting product that we built. We built it on the edge, using Cloudflare Workers, and that was difficult. It’s probably a simpler product to build at the core, but that’s not Cloudflare’s DNA. We build things on the edge, and we were really looking at scaling. If you try to scale something at the core, it becomes a lot more difficult.

The big problem we had when building Waiting Room was sharing state. We wanted users to have one waiting room experience, globally, and we were talking about 200-plus locations. That’s not easy.

So I’ll give you an example. Let’s say there’s a concert here in the Bay Area. Most buyers for that concert are going to be in the Bay Area, and they would likely connect to our San Jose data center. However, some are not. You’re going to have a handful or some percentage of buyers that will be worldwide who fly in for the concert or maybe are traveling at that time.

So how do you make it fair? You can’t have one queue for users in the Bay Area and a separate queue for users at every other location. That required us to think about how we could share state across the edge. And I think this is where the future of the edge is going.

We use our own Durable Objects product (you can see it here on the slide) to sync and share state across all of the locations. But as we, as an industry, look to solve more of these problems on the edge, I think you’re going to see a lot more use cases of software at the edge where sharing state is still hard. What do you do about consistency? Should it be eventual or immediate? I think that’s the future of our software.
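A toy model of the fairness problem in the concert example. It assumes one logically shared queue (which, per the talk, Waiting Room implements with Durable Objects); here that shared state is just an in-memory object, and the class name and location codes are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class GlobalWaitingRoom:
    """Hypothetical in-memory stand-in for one globally shared waiting-room state.

    This is NOT Cloudflare's implementation, just a model of the fairness
    property that a single shared queue provides.
    """

    capacity: int  # how many users may be on the site at once
    active: set[str] = field(default_factory=set)
    # One queue for every location, so arrival order alone decides who goes next.
    queue: deque[tuple[str, str]] = field(default_factory=deque)

    def arrive(self, user_id: str, location: str) -> str:
        if not self.queue and len(self.active) < self.capacity:
            self.active.add(user_id)
            return "admitted"
        self.queue.append((user_id, location))
        return f"queued at position {len(self.queue)}"

    def leave(self, user_id: str) -> list[str]:
        """A user leaves the site; admit waiting users in global arrival order."""
        self.active.discard(user_id)
        admitted = []
        while self.queue and len(self.active) < self.capacity:
            next_user, _location = self.queue.popleft()
            self.active.add(next_user)
            admitted.append(next_user)
        return admitted
```

For example, with `capacity=2`, if two users arrive through a San Jose location and are admitted, and then a London user queues before another San Jose user, the London user gets the next free slot. Separate per-location queues could not guarantee that ordering. The hard part at the edge is keeping this single queue consistent across 200-plus locations, which an in-memory object simply sidesteps.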

PAVAN TIRUPATI: Great. Thank you, Sergi. I know, at WP Engine, we have this edge-first mentality to ensure that we are delivering performance and security to our customers. Sergi, what are your final thoughts or words of advice or top considerations or suggestions for the developers on the call who are building at the edge?

SERGI ISASI: So I think, first of all, you’re building at the right place. And secondly, I think it’s to be creative. There’s lots of things that if you had asked me, a year ago, whether we could do them at the edge, I would have said, eh, I don’t know. I don’t think so. And there’s just a faster pace of innovation that you’re finding here and lots of creative devs that are thinking about the problems that you’re thinking of and coming up with solutions both on the customer and product side.

Another thing is to communicate and share. We’ve seen a lot of movement, particularly in developer Discords, toward finding new, creative ways to solve problems and build more at the edge. Lastly, as a plug for Cloudflare, if there’s something you can’t do, find a Cloudflare product manager. Send us an email, find us on Twitter, or whatever, and let us know what you’re looking to build, and we’ll see if we can help you build it.

PAVAN TIRUPATI: That’s awesome. And I think it’s fair to say that the edge is no longer an edge case, because if security and performance are your focus, then the edge is the place to be.

So thank you, Sergi, for those great words about edge. I hope you guys found this session useful. Thank you for taking the time and joining us. I hope you have a good rest of the day.

PRESENTER: And that is a wrap for DE{CODE} 2022. I hope you found it inspirational and are leaving with more WordPress expertise and new community connections. Look out for the recorded content on the site from Friday to catch up with anything you may have missed or watch a video again.

I want to say a final thank you to our sponsor partners: Amsive Digital, Box UK, Candyspace, Draw, Elementary Digital, Illustrate Digital, Kanopi Studios, Springbox, Studio Malt, StrategiQ, WebDev Studios, and 10Up. A massive thank you for donating to our DE{CODE} fundraiser. We really appreciate your generosity.

Now, for everybody who has been interacting with us in our Attendee Hub and our sessions, we will pick the top three winners and let you know how you can claim your prize at the end of DE{CODE}. We look forward to seeing you again at our future events, either in person or virtually. We can’t wait to bring you more on the latest WordPress development trends and how you can implement them to build WordPress sites faster. That’s all from me. Thank you very much for joining us and take care.